NIH and Nihilism

Some thoughts about permacomputing

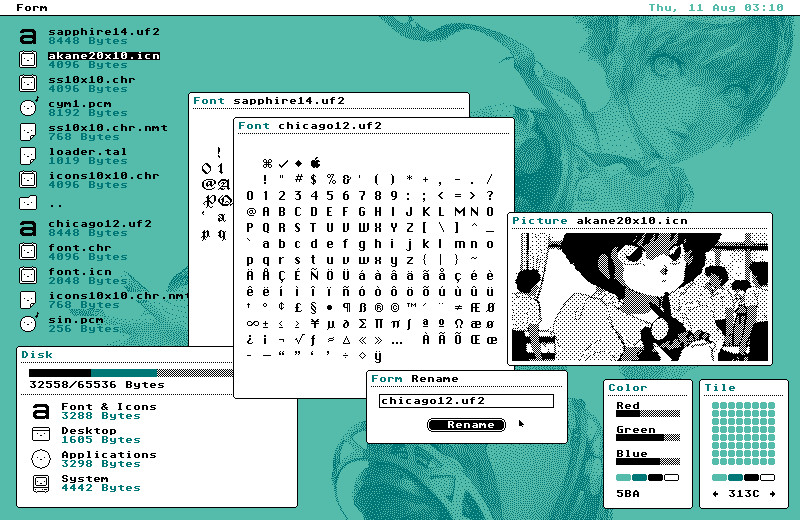

Have you heard of Uxn? If not, check it out: it’s a neat little project. The question it seeks to answer is: can we build a fun and featureful software ecosystem on top of a ‘thin waist’, maximally portable virtual machine?

A previous incarnation of the following commentary appeared on lobste.rs and received criticism, perhaps fairly, for being unduly negative about Uxn. But this is not a criticism of Uxn; it’s a criticism of the value that sometimes gets read into it and into similar projects. I think that Uxn is a beautiful little experiment, and I continue to enjoy watching it grow in earnest. You should too.

When I hear about a project like Uxn, I’m always split.

There’s the programming language theory nerd in me who loves esotericism and Turing tarpits, and who would desperately love to spend a decade rebuilding the entire software (and perhaps even hardware) stack from the bottom up, “properly”. There’s something magical about the way the universality of computation allows the smallest of seeds to grow, with enough time and work, into a system that can run any program or perform any computation.

But then I hear people talk about Uxn as if it represents a solution to the problem of ‘bit-rot’, and a little part of me dies inside. Describing Uxn and its ilk as permacomputing is preservation theatre. It’s a distraction from the real-world, actual work of preserving software and of improving software resilience, compatibility, and accessibility, work that so desperately needs attention. That work doesn’t involve pie-in-the-sky miniaturisation; it means:

- Doing the hard graft of contributing to existing virtual machine and emulator projects
- Mirroring, archiving, and managing disparate and eclectic data sets and formats of old, many of which refuse to conform to modern sensibilities
- Fighting for a culture of reliability and backward compatibility in mainstream software projects so that stuff doesn’t end up breaking
- Translating, migrating, and maintaining old documentation so that future generations can continue to run old software, rather than cosplaying running old software by actually running new software, just with a significant performance hit, 8-bit graphics, and an arbitrary 64k memory limit
- Ensuring that upcoming APIs and standards do not paint themselves into corners that make the software depending on them impossible to run in a decade

Genuine permacomputing efforts are, for the most part, in direct opposition to the nihilistic ‘burn it all down and start again’ attitude that quietly underpins projects like Uxn. Actually keeping old software running requires dealing with complexity head-on, not wishing it away in frustration. It means not being prescriptive or particular about how your client program chooses to model its environment.

A controversial opinion of mine is that one of the greatest software preservation efforts can be found in Microsoft Windows. We like to think of Windows as ‘bloated’, but we forget that a significant part of the reason is that Microsoft makes a genuine effort to support decades-old software, and even does a half-decent job of it on occasion. I’m always surprised to hear about the sheer effort that goes into keeping 35-year-old programs running on Windows. Linux might have retained syscall ABI compatibility, but you need a hell of a lot more than the kernel to run old Linux software.

I think Uxn is beautiful and fun, but I really hope that people reading about it don’t mistake it for something that’s going to save the world from bit-rot. It is a toy, and it will not stop being a toy. If you want to preserve the past, there are no easy shortcuts. And, yes, deciding to reinvent the whole stack is easy in comparison.